About OpenGradient

OpenGradient is the network for open intelligence - a decentralized AI platform that enables developers to host, execute, and verify AI models at scale. Access verifiable LLM inference through x402, upload models to the decentralized Model Hub, deploy services with the Python SDK, and create personalized AI experiences with MemSync - all with built-in verification through cryptographic proof and attestation.

What is OpenGradient?

OpenGradient is the network for open intelligence - an end-to-end decentralized infrastructure for AI model hosting, secure execution, agentic reasoning, and application deployment.

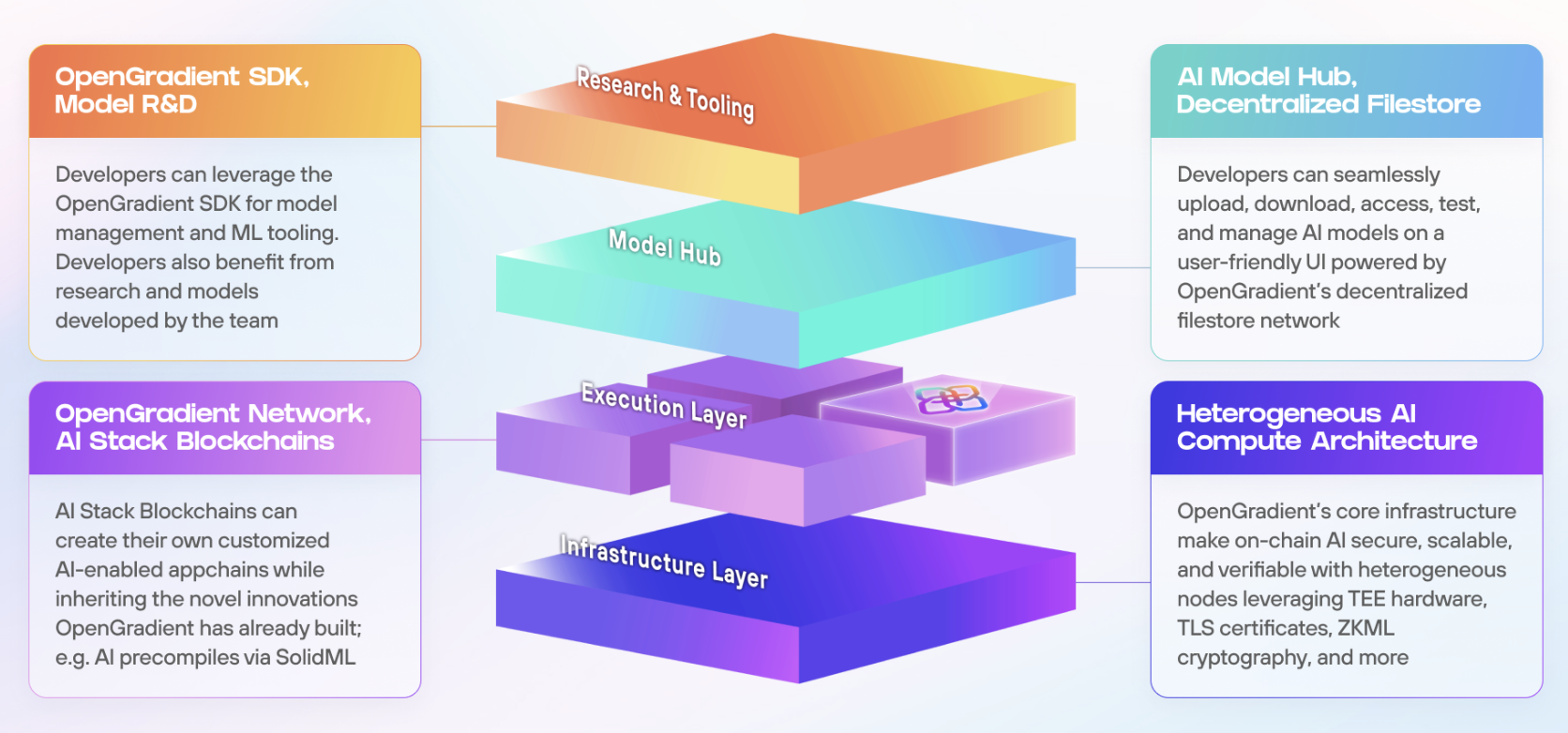

OpenGradient's heterogeneous compute architecture lets permissionless AI inference, statistical analysis, data processing, and agent execution run securely and at scale, end-to-end, with verifiable guarantees. Developers can use the platform's AI models and compute for features such as AI agents, risk management, and DeFi mechanism design.

The OpenGradient Network

The OpenGradient Network is the decentralized foundation that powers all OpenGradient products. It provides:

Verifiable AI Execution: All inference runs within Trusted Execution Environments (TEEs), providing cryptographic attestation that proves exactly which models and prompts were used.

Decentralized Infrastructure: A permissionless network of compute nodes that enables anyone to host and execute AI models without centralized gatekeepers.

Inference is paid for with `$OPG` testnet tokens on Base Sepolia, with execution and verification handled by the OpenGradient network.

On-chain ML (Coming Soon): ZKML-verified on-chain inference for smart contract integration, currently in development on our alpha testnet.

TIP

You can find the OpenGradient network explorer at https://explorer.opengradient.ai.

Products

OpenGradient offers a suite of products that let you interact with the network for specific use cases - from running verifiable LLM inference to hosting models and building AI applications with persistent memory.

x402 LLM Inference: The x402 protocol enables payment-gated HTTP APIs for LLM inference. Access models from OpenAI, Anthropic, Google, xAI, and others through OpenGradient's TEE-verified infrastructure, paid for with `$OPG` testnet tokens on Base Sepolia.

Model Hub: A decentralized model repository built on Walrus storage that allows anyone to permissionlessly upload, browse, and use AI models. The Model Hub supports all model architectures from linear regression to neural networks, LLMs, and stable diffusion models.

MemSync: A long-term memory layer for AI built on OpenGradient's verifiable LLM inference. MemSync uses TEE-verified execution to automatically extract meaningful memories from conversations, classify them intelligently, and generate user profiles. All memory operations benefit from OpenGradient's cryptographic guarantees, enabling AI applications to maintain context across sessions with full transparency.

Python SDK: A comprehensive toolkit for building applications with OpenGradient's infrastructure. The SDK enables LLM inference, model management, and seamless integration with the Model Hub. Available via `pip install opengradient`, with a CLI tool for easy access and iteration.
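To make the "payment-gated HTTP API" idea behind x402 more concrete, here is a minimal sketch of the request, 402 response, and paid retry cycle. It runs entirely in memory and does not call OpenGradient or the Python SDK. The endpoint function, the `X-PAYMENT` header name, the fields in the payment requirements, and the placeholder signature are all assumptions made for illustration; check the x402 specification and the OpenGradient docs for the real wire format.

```python
import base64
import json


def fake_endpoint(headers: dict, body: dict) -> tuple:
    """Hypothetical stand-in for a payment-gated inference endpoint.

    Returns (status_code, response_body).
    """
    if "X-PAYMENT" not in headers:
        # No payment attached: reply 402 and describe what would be accepted.
        requirements = {
            "accepts": [
                {"network": "base-sepolia", "asset": "OPG", "amount": "1000"}
            ]
        }
        return 402, requirements
    payment = json.loads(base64.b64decode(headers["X-PAYMENT"]))
    if payment.get("asset") != "OPG":
        return 402, {"error": "unsupported asset"}
    # Payment accepted: return a dummy completion instead of real inference.
    return 200, {"completion": "echo: " + body["prompt"]}


def build_payment_header(requirement: dict) -> str:
    """Placeholder: a real client would sign a payment with a wallet here."""
    payload = {
        "network": requirement["network"],
        "asset": requirement["asset"],
        "amount": requirement["amount"],
        "signature": "<placeholder>",  # assumption: no real signing is done
    }
    return base64.b64encode(json.dumps(payload).encode("utf-8")).decode("ascii")


def call_with_payment(body: dict) -> dict:
    """Try the request, and retry once with a payment header if asked to pay."""
    status, response = fake_endpoint({}, body)
    if status == 402:
        header = build_payment_header(response["accepts"][0])
        status, response = fake_endpoint({"X-PAYMENT": header}, body)
    if status != 200:
        raise RuntimeError("request failed with status %d" % status)
    return response


result = call_with_payment({"prompt": "hello"})
```

The point of the pattern is that the client needs no account or API key up front: the first response says what payment is required, and the retry carries it.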

What can you do with OpenGradient?

OpenGradient supports a wide range of applications. Here are some examples:

Verifiable LLM Applications: Access LLMs from OpenAI, Anthropic, Google, and xAI through TEE-verified inference. Get cryptographic proof of which prompts were used, enabling transparent verification of AI agent actions and decision-making.

AI Agents with Provable Actions: Create LLM-backed AI agents where you can cryptographically prove which prompt was used to take a specific action, enabling full transparency and auditability for autonomous agent decisions.

Web Applications: Build secure AI applications using the Python SDK that leverage OpenGradient's verified and decentralized inference infrastructure, ensuring full integrity and security of AI models and inferences.

Personalized AI Applications: Build AI applications with persistent memory and user personalization using MemSync, which uses OpenGradient's verifiable LLM inference for memory extraction and user profile generation. Create chatbots, assistants, and context-aware applications that remember user preferences and maintain long-term context across sessions.

Model Hosting: Upload AI and ML models to the decentralized Model Hub for permissionless access and distribution.
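The idea of proving "which prompt was used" can be illustrated with a simple digest comparison. The sketch below is a deliberate simplification: real TEE attestation relies on hardware-signed evidence that must itself be verified, and this example shows only the final step of checking that a reported digest matches the model and prompt you expected. The `request_digest` field and both function names are hypothetical and not part of any OpenGradient API.

```python
import hashlib
import hmac
import json


def request_digest(model: str, prompt: str) -> str:
    """SHA-256 over a canonical JSON encoding of the model name and prompt."""
    canonical = json.dumps(
        {"model": model, "prompt": prompt},
        sort_keys=True,
        separators=(",", ":"),
    )
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def matches_attestation(attestation: dict, model: str, prompt: str) -> bool:
    """Check a (hypothetical) attestation record against an expected request."""
    expected = request_digest(model, prompt)
    reported = attestation.get("request_digest", "")
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(expected, reported)


# Example: an auditor re-derives the digest for the prompt an agent claims it used.
record = {"request_digest": request_digest("example-model", "Rebalance portfolio?")}
claimed_ok = matches_attestation(record, "example-model", "Rebalance portfolio?")
tampered_ok = matches_attestation(record, "example-model", "Sell everything?")
```

Because the digest covers both the model name and the prompt, changing either one produces a mismatch, which is what makes an agent's decision auditable after the fact.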

Coming Soon

AI-enabled smart contracts are under development on our alpha testnet. This will enable:

Dynamic DeFi Applications: Build AMMs with dynamic fee models, lending pools with ML-based risk calculation, or other DeFi protocols that adapt based on model predictions.

Automated ML Workflows: Deploy automated workflows that execute on a schedule with live oracle data, enabling continuous model inference and decision-making without manual intervention.

Composable AI Systems: Chain multiple models together to create composable AI model ensembles or mixture-of-expert applications with ZKML and TEE verification.
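As an illustration of the dynamic fee idea in the DeFi item above, the following sketch maps a model's volatility prediction to a swap fee. It is plain off-chain Python, not smart contract code and not an OpenGradient API. The linear formula, the parameter names, and the default values are all assumptions chosen for the example.

```python
def dynamic_fee_bps(
    predicted_volatility: float,
    base_bps: float = 30.0,
    slope_bps: float = 200.0,
    min_bps: float = 5.0,
    max_bps: float = 100.0,
) -> float:
    """Return a swap fee in basis points that grows with predicted volatility.

    The fee is base_bps + slope_bps * predicted_volatility, clamped to
    the range [min_bps, max_bps] so a bad prediction cannot push it to
    an extreme value.
    """
    if predicted_volatility < 0:
        raise ValueError("predicted_volatility must be non-negative")
    raw_fee = base_bps + slope_bps * predicted_volatility
    return max(min_bps, min(max_bps, raw_fee))


calm_fee = dynamic_fee_bps(0.0)       # base fee only
moderate_fee = dynamic_fee_bps(0.25)  # base fee plus a volatility premium
stressed_fee = dynamic_fee_bps(1.0)   # clamped to max_bps
```

Clamping matters here because the fee is driven by a model output: bounding the result limits the damage a single outlier prediction can do.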